When Video Becomes Intelligence: The AI Visual Frontier Reshapes Everything

Sora 2, Claude 4.5, and the platform war for synthetic media dominance

Welcome to this week’s AI Top Tools Weekly — where your unfair advantage in AI insight is the norm, not the goal.

This week, something shifted. The frontier of AI is no longer just text or code; it’s motion, vision, sound, and identity. Sora 2 dropped. Claude Sonnet 4.5 reportedly sustained 30+ hours of autonomous work on complex tasks. Agent orchestration is now a baseline expectation. And underneath, the battles over rights, authenticity, and platform control are heating up.

If you’re building a product, shaping content, or running a team that’s trying to ride the wave — you need more than headlines. You need a map through the unfolding terrain.

Here’s how today’s issue is structured:

In the free section (below):

A deep dive on Sora 2 — what it is, why it matters, and how it changes choices

The context: how Claude 4.5 is raising the bar for agentic work

A survey of the shifting landscape: where AI video collides with IP, trust, and platform power

Tool shots & tactical ideas you can test now

In the premium section:

The full AI video platform playbook — how to plug in (or fight back)

Drop-in agent architectures tailored for multimodal + video contexts

A real enterprise use case: how one team reduced video costs by 80% using AI pipelines

Hidden tools and prompt frameworks no one’s talking about

Forecasts and insider signals for 2026 in synthetic media

If you upgrade in the next 7 days, you get 10% off for 12 months — zero fluff, deep value.

Let’s get into it.

Breakthrough of the Week: Sora 2 — AI Video, But Now a Platform

Let’s start here: Sora 2 is not just an improved model. It is OpenAI’s bold move to make video generation social, remixable, identity‑aware, and monetizable.

🧱 What’s new in Sora 2

From the official system card and announcements:

Synchronized audio + video: prior models struggled with perfect lip sync and ambient audio; Sora 2 bridges that gap. (OpenAI)

Better physics and realism: more accurate motion, lighting consistency, object interaction. (OpenAI)

Expanded stylistic range + steerability: you can push stylization, cinematic framing, or realism depending on the prompt. (OpenAI)

Identity “cameo” insertion: users can insert themselves (or someone else, with consent) into generated videos.

App + social feed layer: Sora now has a native app — users create, share, remix. The model is not isolated; it’s embedded in a feed ecosystem. (Venturebeat)

Tiered access: free usage limits for everyone; “Sora 2 Pro” quality for ChatGPT Pro users. (Venturebeat)

IP / rights updates: OpenAI had to revise its copyright policy due to backlash. They are scaling back the opt-out approach and giving rights holders more control. (Business Insider)

Rapid virality & controversy: It became #1 in the App Store, but also drew fire for misuse and weak guardrails. (Business Insider)

In short: Sora 2 is aiming to be the YouTube / TikTok of AI video — not just a model you call via API.

📈 Why Sora 2 matters more than you think

Let me frame three core shifts:

From tool ⇒ platform

Video models used to be B2B tooling. Now, Sora is a social product: creation + consumption + remixing in one stack. That changes incentives, power, and control.

From prompt-to-video to narrative + identity experiences

The ability to insert identity (you, your avatar) means storytelling becomes personal. Brands, creators, and users will begin to think in terms of “my synthetic presence,” not just synthetic content.

From adoption to lock-in

Once you start publishing and accumulating views in a feed, your attention, community, data, and identity get locked into that ecosystem — unless you can export or interoperate. OpenAI now occupies that space by default.

🧩 Use-case sketches (today)

Even with limited access, here’s how Sora 2 is already reshaping thinking:

Micro-branded content farms: create 5–10 video variants daily, test which style or persona beats, and iterate.

Synthetic influencers / avatars: build consistent prompt styles & voices, then clone or animate them per campaign.

Interactive storytelling: allow users to fork or remix your brand video, creating a “living gallery” of derivative works.

Identity-driven personalization: send “yourself in the future” style videos as onboarding flows, marketing hooks, or user-journey touchpoints.

Complementary Breakthrough: Claude Sonnet 4.5 — The Agent Just Got Smarter

If Sora 2 is the video frontier, Claude 4.5 is the agent frontier. Last week, Anthropic released Claude Sonnet 4.5, and it’s pushing boundaries of sustained, tool-using intelligence. (Anthropic)

🔍 What’s upgraded

Autonomous endurance: internally, Anthropic says Sonnet 4.5 ran for 30+ hours on complex tasks. (Ars Technica)

Better OS / tool use: it leaps ahead in “computer competence” tasks, working with spreadsheets, APIs, shell commands more reliably. (Anthropic)

Enhanced alignment & safety: fewer misaligned or malicious outputs in sensitive areas like political influence, child content, etc. (CyberScoop)

Expanded integration: Sonnet 4.5 is now available via Amazon Bedrock, Microsoft Copilot Studio, GitHub Copilot, etc. (Microsoft)

Better context / memory: improved management of large interaction histories and tool orchestration. (Anthropic)

📊 Why Sonnet 4.5 is critical

In the agent wars, raw bursts of power aren’t enough. The battleground is long-horizon, coherent, tool-enabled workflows. Sonnet 4.5 is staking a claim there.

What this means:

You can feel safer delegating mid-length tasks (a few hours) to agents.

The margin between “good enough to prototype” and “good enough to deploy” shrinks.

Teams that invest in agent orchestration tooling (or open agent hubs) will see outsized leverage.

🧪 Tactical ideas to test now

Take one internal workflow (e.g. weekly report, analysis, code refactor) and run it under 4.5 — compare divergence, error drift, manual patching.

Swap out your current LLM in multi-agent orchestration (if you have one) for Sonnet 4.5 and measure stability over time.

Build side-by-side “video agent + reasoning agent” chains: e.g. a script agent feeding Sora video agent, then a review agent.

Stress test safety boundaries: prompt ethically ambiguous tasks (within policy) and observe consistencies or failures.
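The script agent → video agent → review agent chain above can be sketched as a small loop. This is a minimal sketch, not a real integration: `write_script`, `render_video`, and `review` are hypothetical callables you would back with whichever LLM and video model you have access to, and the 0.8 target score is an arbitrary placeholder.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Attempt:
    prompt: str
    video_ref: str
    score: float
    feedback: str


def run_chain(
    brief: str,
    write_script: Callable[[str], str],                  # hypothetical: brief -> video prompt
    render_video: Callable[[str], str],                  # hypothetical: prompt -> video path or URL
    review: Callable[[str, str], tuple[float, str]],     # hypothetical: -> (score, notes)
    max_rounds: int = 3,
    target_score: float = 0.8,
) -> list[Attempt]:
    """Script -> video -> review -> re-prompt, until good enough or out of rounds."""
    attempts: list[Attempt] = []
    prompt = write_script(brief)
    for round_no in range(max_rounds):
        video_ref = render_video(prompt)
        score, feedback = review(prompt, video_ref)
        attempts.append(Attempt(prompt, video_ref, score, feedback))
        if score >= target_score or round_no == max_rounds - 1:
            break
        # Fold the reviewer's notes back into the next script request.
        prompt = write_script(f"{brief}\nRevise to address: {feedback}")
    return attempts
```

Keeping every attempt, not just the winner, gives you the divergence and error-drift log that the first idea in this list asks you to compare.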

The Landscape: Video, Agents, and the Platform War

To make sense of Sora and Claude in isolation is to miss the larger tectonics. Let’s frame the terrain:

🗺️ Converging trends

Multimodal agents

Models will increasingly need vision, audio, video, and memory, not just text. The frontier is unified agents. Sora 2, Claude 4.5, and future models will overlap in capability.

Platform + feed control = power

Owning the recommendation, monetization, and identity stack gives leverage not just over creators, but over who wins in the attention economy.

Content authenticity & trust become battlegrounds

The more realistic synthetic media becomes, the more trust, provenance, verification, watermarking, and rights enforcement will matter.

Rights markets & licensing protocols

As remixing becomes default, content owners will demand better opt-in/opt-out tools, license marketplaces, and tracking.

Regulation and policy fights intensify

Synthetic media will be front and center in debates over deepfakes, national security, AI transparency, and platform responsibility.

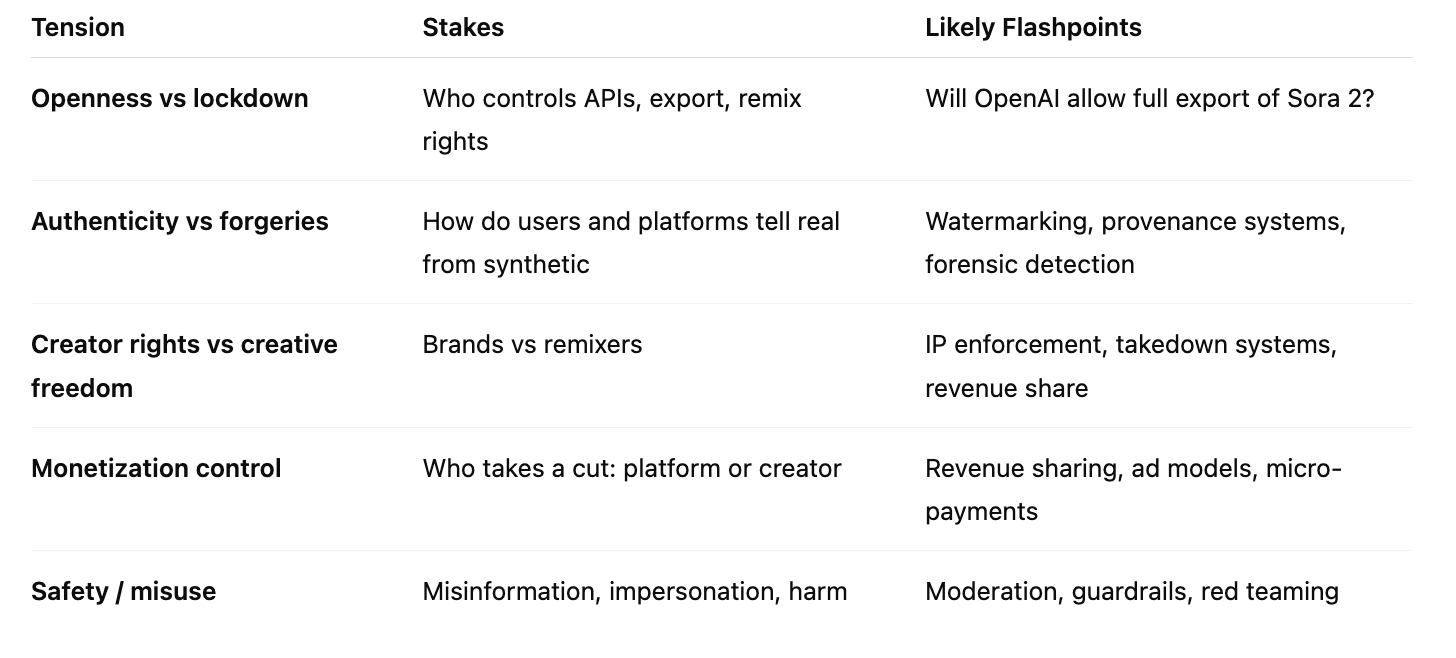

🔮 Key tensions to watch

Tool Shots & Tactical Ideas

Here are tools and prompts you can plug in today — even if you don’t have full access to Sora 2 or Sonnet 4.5:

🛠 Tools & combos to try

Azure + OpenAI Video Generation: Microsoft’s quickstart uses Sora via Azure APIs. Great for experimental pipelines. (Microsoft Learn)

Runway / Pika / Veo: fallback generative video tools to prototype storyboarding, test styles, compare output fidelity.

Prompt chaining: Use GPT-4 (or Claude Sonnet 4.5) to generate video scripts → pass to video models → analyze output → re-prompt.

Watermark & metadata embedding: always append a hidden tag or token in every synthetic asset so provenance tracking is possible downstream.

Diff / variant generation tools: produce several variants of the same prompt (e.g. lighting, camera, style) and A/B test which resonates.

Feed simulators: build mini internal feeds to test video virality (e.g. rank reactions, remix depth).
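For the feed simulator idea, a rough starting point is a ranking function over your internal test clips. The sketch below is an assumption-heavy toy: the `Clip` fields, the scoring formula, and the `remix_weight` of 0.5 are all made up for illustration and do not reflect how any real feed ranks content.

```python
from dataclasses import dataclass


@dataclass
class Clip:
    clip_id: str
    views: int
    reactions: int
    remix_depth: int  # longest chain of remixes descending from this clip


def engagement_score(clip: Clip, remix_weight: float = 0.5) -> float:
    """Reaction rate, boosted by how deep the remix chain goes (toy formula)."""
    if clip.views == 0:
        return 0.0
    reaction_rate = clip.reactions / clip.views
    return reaction_rate * (1 + remix_weight * clip.remix_depth)


def rank_feed(clips: list[Clip]) -> list[Clip]:
    """Highest-scoring clips first."""
    return sorted(clips, key=engagement_score, reverse=True)
```

Even a toy like this lets you ask a useful question of your variants: does a clip that invites remixing beat one that simply collects reactions?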

✍ Sample prompt templates

Brand promo

“A 10‑second vertical cinematic scene showing our product in use: soft lighting, shallow depth, a hand holding it, gentle motion, warm color grade.”

User cameo

“Insert me (photo provided) walking through a futuristic city at dusk, ambient music, smooth transitions.”

Remix starter

“Take this base video (link) and remix it into a 3-second teaser with glitch transitions, dark theme, synthwave audio.”

Narrative snippet

“Show a split-screen: left side ‘past me’ in office, right side ‘future me’ in flying car, voiceover: ‘This could be your evolution.’”

Agent-to-video chain

“Step 1: GPT should write a 5‑scene storyboard. Step 2: feed scenes into video model. Step 3: analyze output and produce variant.”

Test five prompts per day and flag the ones promising enough to double down on.
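If you want those daily test prompts generated systematically, one option is to expand a template across a few style axes. This is a minimal sketch: the template is adapted from the brand promo example above, and the axis values are placeholders to swap for your own.

```python
from itertools import product

# Template adapted from the brand promo prompt; the slots are the axes to vary.
BASE = (
    "A 10-second vertical cinematic scene showing our product in use, "
    "{lighting}, {camera}, {grade}."
)

# Placeholder axis values; replace with the styles you actually want to compare.
AXES = {
    "lighting": ["soft lighting", "hard rim lighting"],
    "camera": ["shallow depth of field", "slow push-in"],
    "grade": ["warm color grade", "cool color grade"],
}


def build_variants(template: str, axes: dict[str, list[str]]) -> list[dict[str, str]]:
    """Return one prompt per combination of axis values, tagged with its choices."""
    keys = list(axes)
    variants = []
    for idx, combo in enumerate(product(*(axes[k] for k in keys)), start=1):
        choice = dict(zip(keys, combo))
        variants.append({"id": f"v{idx:02d}", "prompt": template.format(**choice), **choice})
    return variants
```

Three axes with two values each yields eight prompts. Because each variant carries its axis choices alongside the prompt, you can later attribute a win to lighting, camera, or grade rather than to the prompt as a whole.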

Risks, Guardrails & Mitigation

The frontier is not without danger. As synthetic video becomes mainstream, here are the most urgent risks:

⚠️ Misinformation & deepfakes

We’re already seeing AI-generated content depicting mass shootings, war scenes, and public figures saying things they never said. (The Guardian)

As realism improves, seeing is no longer believing. Trust collapses, systems get gamed.

🧩 IP & brand abuse

Remix + identity insertion = easy impersonation or brand misuse.

OpenAI is already backtracking on opt-out policies because content owners pushed back. (Business Insider)

🚫 Platform lock-in and censorship

Once creators are embedded in Sora’s ecosystem, platform policy changes or takedowns can silently eliminate reach or content.

If export is limited, you lose sovereignty over your creative assets.

🗳 Political & social risk

Synthetic media could be used in campaigns, elections, propaganda, harassment at scale. Guardrails must scale faster than adoption.

🧠 Disinformation fatigue & distrust

If audiences can no longer trust visuals, the value of video as a persuasive medium erodes. That’s a systemic risk for creators.

Mitigations you should build now:

Embedded provenance: watermark, invisible hashes, blockchain-backed metadata

Traceability tools: allow users to query whether a video is synthetic and by which model

Rights management protocols: let IP owners opt in / opt out granularly

Transparency labeling: mandate “AI-generated” labels in feeds

Red teaming & adversarial testing: build models to intentionally break content, then patch
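As a starting point for embedded provenance, here is a minimal sketch that records a content hash and generation details in a sidecar JSON file next to each asset. Note the swap: this is a sidecar manifest, not an in-band watermark, and it is not an implementation of any standard. The field names and the `.provenance.json` suffix are assumptions chosen for illustration.

```python
import hashlib
import json
from datetime import datetime, timezone
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Hash the file in 1 MiB chunks so large videos do not need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(1 << 20), b""):
            digest.update(chunk)
    return digest.hexdigest()


def write_manifest(asset: Path, model: str, prompt: str) -> Path:
    """Write <asset>.provenance.json describing how the asset was generated."""
    manifest = {
        "asset": asset.name,
        "sha256": sha256_of(asset),
        "ai_generated": True,
        "model": model,    # free-text label supplied by the caller
        "prompt": prompt,
        "created_utc": datetime.now(timezone.utc).isoformat(),
    }
    out = asset.with_name(asset.name + ".provenance.json")
    out.write_text(json.dumps(manifest, indent=2), encoding="utf-8")
    return out


def matches_manifest(asset: Path, manifest_path: Path) -> bool:
    """True if the asset's current bytes still match the recorded hash."""
    manifest = json.loads(manifest_path.read_text(encoding="utf-8"))
    return manifest["sha256"] == sha256_of(asset)
```

A sidecar only helps while it travels with the file, and any re-encode changes the hash, so treat this as an internal audit trail rather than proof of origin for content in the wild.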

What You Should Do This Week

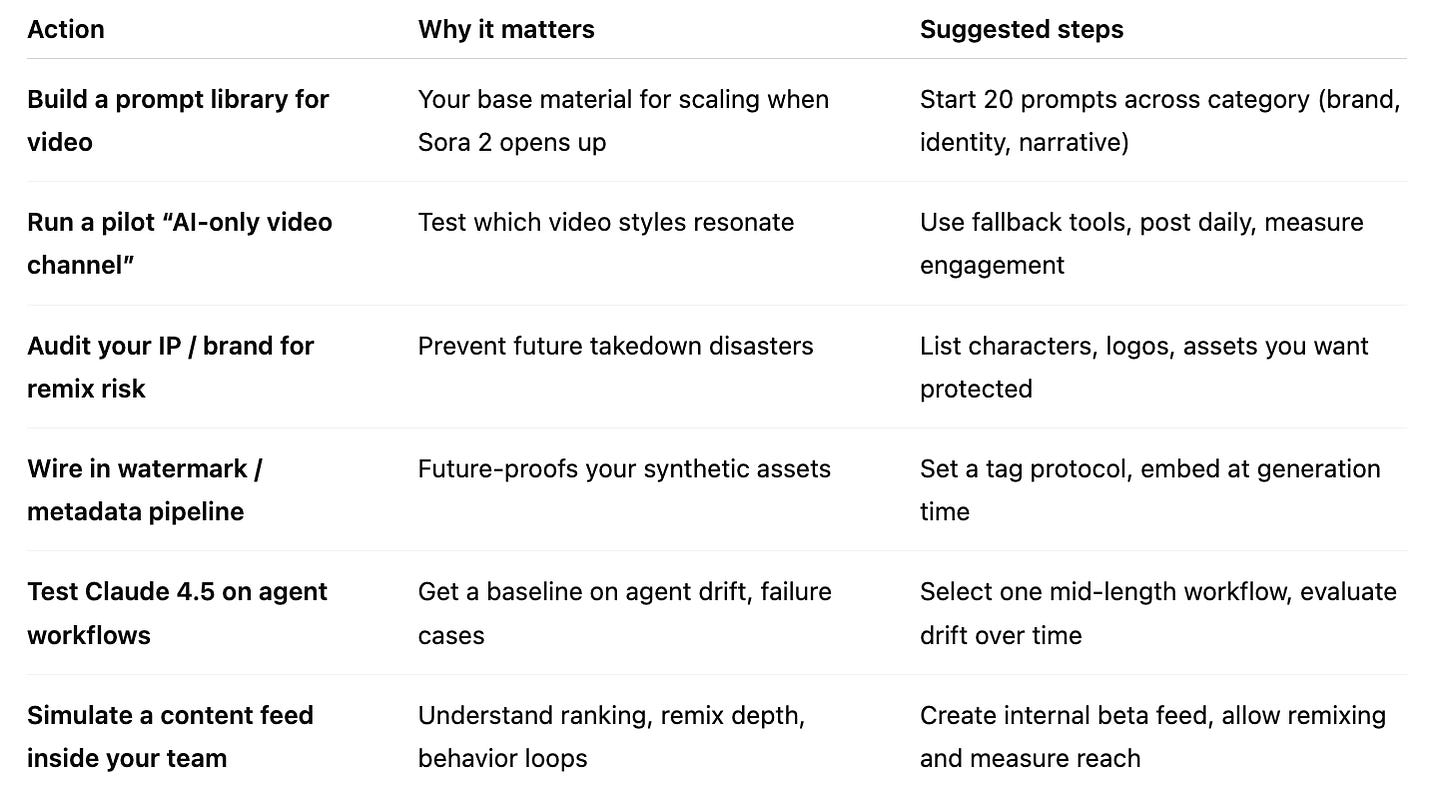

Here’s a tactical checklist of concrete steps you can take in the next 7 days to get ahead rather than catch up:

🚀 Final Thoughts Before the Paywall

This week’s launches — Sora 2 and Claude Sonnet 4.5 — aren’t incremental. They signal a turning point. AI is migrating into time + motion.

If you treat video as a second-class citizen in your strategy, you risk being disrupted. If you act now — building prompt systems, infrastructure, identity layers, and trust systems — you position yourself not as a subscriber, but as a contender.